Let's be honest. Your hiring process is probably a mess. A chaotic mix of notes scribbled on a legal pad, vague gut feelings, and post-interview Slack huddles where everyone tries to remember what the candidate actually said.

You're basically just flipping a coin and hoping for the best.

An interview evaluation sheet is your way out. It’s a simple but brutal tool that turns subjective, gut-feel impressions into structured, comparable evidence. It forces your team to assess every candidate against the same criteria, cutting down on bias and helping you make smarter, faster hiring decisions.

Think of it as a scorecard for your most expensive business decision.

Why Gut-Feel Hiring Is Costing You a Fortune

That gut feeling you're so proud of? It’s often just a cocktail of unconscious biases in a clever disguise. We've all been there. Study after study shows that without a structured process, we naturally gravitate toward candidates who remind us of ourselves, not necessarily the ones who are best for the job.

Hope you enjoy spending your afternoons fact-checking résumés and running technical interviews, because that becomes your full-time job every time you have to replace another bad hire.

The $500 Hello

Hiring based on who you "liked the most" is a recipe for disaster. Charisma doesn't write code, close deals, or manage a team. When you're only relying on memory and unstructured feedback, you aren't comparing candidates against the actual needs of the job. You're comparing your fuzzy recollections of their personalities.

This kind of chaos leads directly to some painful, expensive outcomes:

- Costly Bad Hires: The U.S. Department of Labor estimates a bad hire can cost a company at least 30% of that employee's first-year earnings. Ouch.

- Wasted Time: Your team burns hours in redundant interviews and agonizing debriefs, only to end up right where they started—confused and without a clear winner.

- Biased Decisions: You accidentally build a homogeneous team because everyone keeps hiring people just like them, which is a death sentence for innovation.
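To make that Department of Labor figure concrete, here is a back-of-the-envelope sketch in Python. It simply multiplies first-year earnings by 30%. The $90,000 salary is a made-up example, and because the estimate says "at least," the result is a floor, not a full accounting of the costs listed here.

```python
def min_bad_hire_cost(first_year_earnings: float, rate: float = 0.30) -> float:
    """Lower bound on the cost of a bad hire, using the
    'at least 30% of first-year earnings' rule of thumb."""
    if first_year_earnings < 0:
        raise ValueError("first_year_earnings must be non-negative")
    return first_year_earnings * rate


# Hypothetical role paying $90,000 in its first year.
cost = min_bad_hire_cost(90_000)
print(f"At least ${cost:,.0f}")  # prints "At least $27,000"
```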

The real cost of gut-feel hiring isn't just the salary of the person who quits in six months. It's the lost productivity, the hit to team morale, and the fact that you have to restart the entire expensive, time-sucking process all over again.

This isn't just about being more organized for its own sake. It’s about building a predictable system that consistently identifies top performers instead of just picking the most charming person in the room.

The solution is an interview evaluation sheet. It's not more paperwork; it's a strategic weapon. It forces you to get clear on what matters and turns your hiring process from a gamble into a calculated, repeatable system. Turns out there’s more than one way to hire elite talent without mortgaging your office ping-pong table.

Building a Truly Unbiased Evaluation Framework

Alright, let's build this thing. A powerful interview evaluation sheet isn't some generic laundry list of questions you downloaded from the internet. It's a strategic framework built around what actually predicts success in a role. This is where we move from flimsy impressions to rock-solid evidence.

Your first job is to define the non-negotiable criteria for the role. And I don’t mean "5 years of experience with React." That’s a lazy filter, not a measure of competence. I’m talking about the real skills, competencies, and behavioral attributes that separate a good hire from a great one.

This is where most companies drop the ball. They write down vague garbage like "good communicator" or "team player." What does that even mean? It’s completely subjective. Useless.

From Vague Ideas to Concrete Evidence

You need to translate every squishy concept into an objective, observable behavior. This forces every single interviewer to look for and score the exact same thing. No more post-interview debates where one person’s "great energy" is another's "lacked substance."

Let’s get specific. Instead of just listing a skill, define what excellent looks like in action.

Example for a Software Engineer:

- Vague Criterion: Problem-Solving

- Actionable Criterion: Breaks down complex technical problems into smaller, manageable sub-tasks. Clearly articulates trade-offs between different solutions (e.g., performance vs. maintainability) and justifies their chosen approach with data or logic.

Example for a Sales Director:

- Vague Criterion: Leadership

- Actionable Criterion: Provides specific examples of mentoring junior reps to meet their quotas. Describes a time they resolved a team conflict and what the outcome was. Demonstrates a clear process for performance management.

See the difference? One is a guess; the other is a checklist for evidence.

By defining these criteria upfront, you're not just creating an interview evaluation sheet; you're engineering a more predictable hiring outcome. This structured approach isn't just a "nice-to-have." Research has repeatedly found that structured interviews predict job performance better than old-school, unstructured chats, with some estimates putting the gain in predictive validity at roughly 50%.

This isn't about creating more work; it’s about making the work you're already doing count. You're replacing hours of subjective debate with minutes of data-driven analysis. It’s the difference between arguing over who you liked and agreeing on who demonstrated the most capability.

Define Your Core Competencies

Get your team in a room and hash this out before you even post the job. For any given role, you should agree on 4-6 core competencies that are absolutely essential. Any more than that and you risk analysis paralysis.

Here’s a simple, no-fluff process to follow:

- Identify Job Outcomes: What must this person accomplish in their first year to be considered a superstar?

- Translate to Skills: What specific skills and behaviors drive those outcomes?

- Write Behavioral Indicators: For each skill, write 2-3 sentences describing what "good" looks like, just like the examples above.
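If you keep role definitions in a spreadsheet or a small internal tool, the 4-6 competency and 2-3 indicator guidelines are easy to check automatically. The Python sketch below is one hypothetical way to do it; the `Competency` class, its fields, and the sample outcome are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Competency:
    name: str
    outcome: str           # first-year outcome this skill drives (hypothetical)
    indicators: list[str]  # 2-3 observable "what good looks like" statements


def check_role_definition(competencies: list[Competency]) -> list[str]:
    """Return a list of problems; an empty list means the role definition
    follows the 4-6 competencies / 2-3 indicators guideline."""
    problems = []
    if not 4 <= len(competencies) <= 6:
        problems.append(f"expected 4-6 competencies, got {len(competencies)}")
    for c in competencies:
        if not 2 <= len(c.indicators) <= 3:
            problems.append(
                f"{c.name}: expected 2-3 indicators, got {len(c.indicators)}"
            )
    return problems


# A half-finished definition for a Software Engineer role.
role = [
    Competency(
        name="Problem-Solving",
        outcome="Ships the billing migration without a major incident",
        indicators=[
            "Breaks complex technical problems into smaller, manageable sub-tasks.",
            "Articulates trade-offs (e.g., performance vs. maintainability) "
            "and justifies the chosen approach.",
        ],
    ),
]

print(check_role_definition(role))  # prints ['expected 4-6 competencies, got 1']
```

Returning a list of problems instead of stopping at the first one means the hiring team sees everything that still needs hashing out in a single pass.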

This process forces clarity and alignment across your entire hiring team. It’s the foundational work that makes the entire system effective, turning a subjective conversation into a repeatable, data-gathering exercise. To see how these criteria can be structured, you might be interested in our guide to building a complete interview evaluation form.

Designing a Scorecard That Actually Works

So, you’ve got your rock-solid criteria. Fantastic. Now comes the part where most evaluation sheets go to die a slow, painful death in a Google Drive folder. How do you actually measure these things without it feeling like you're grading a high school art project?

Let's be blunt: a simple '1-to-5' scale is useless without clear anchors. It’s just assigning a random number to a gut feeling. A “4” for communication means absolutely nothing if your colleague’s “4” means something totally different. When that happens, you're not comparing candidates; you're just comparing your interviewers' moods.

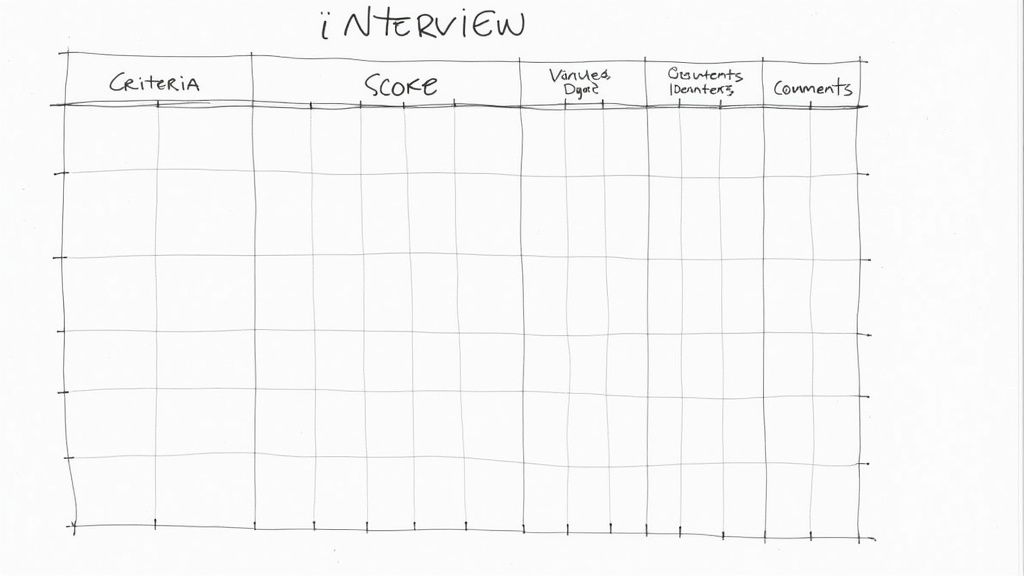

The whole point is to design an interview evaluation sheet so clear that any interviewer—even one pulled in at the last minute—can pick it up and provide data you can actually compare apples-to-apples.

From Numbers to Evidence

It’s time to kill the vague rating scale. A '5' isn't just 'good.' A '5' means 'Candidate provided multiple, specific examples that not only demonstrated the skill but also exceeded the role's requirements.' We need to define what each score means in concrete, behavioral terms. This is where you connect your criteria to a rating scale that actually has teeth.

Your scoring anchors should describe the evidence the candidate provides, not your impression of them.

- 1 (Poor): Candidate could not provide any relevant examples or struggled to articulate their thoughts on the topic.

- 3 (Meets Expectations): Candidate provided at least one clear, relevant example that demonstrates baseline competency for the role.

- 5 (Exceeds Expectations): Candidate provided multiple, specific examples with rich detail, showcasing a deep command of the skill that goes beyond the job's core requirements.

This approach forces every interviewer to justify their score. There's no hiding behind a number. To dig deeper into the core principles of structured evaluations, it’s helpful to understand how to build effective performance systems using KPIs and scorecards.

The single most important rule? No score without a 'why.' Every rating on your sheet must have a mandatory comment section right next to it. This requires the interviewer to jot down the specific evidence—what the candidate said or did—that led to that score.
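The "no score without a why" rule is simple enough to enforce in whatever tool holds your scorecards. Here is a minimal Python sketch, assuming a hypothetical `Rating` record with a 1-5 score and a free-text evidence field: it raises an error unless the score is in range and the evidence box is actually filled in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    competency: str
    score: int     # 1-5, anchored to the definitions on the sheet
    evidence: str  # what the candidate actually said or did

    def __post_init__(self):
        if not 1 <= self.score <= 5:
            raise ValueError(f"{self.competency}: score must be 1-5, got {self.score}")
        # "No score without a 'why'": reject blank or whitespace-only evidence.
        if not self.evidence.strip():
            raise ValueError(f"{self.competency}: a score needs written evidence")


ok = Rating(
    "Problem-Solving", 4,
    "Split the outage fix into three steps and explained the caching trade-off.",
)

try:
    Rating("Communication", 5, "   ")
except ValueError as err:
    print(err)  # prints "Communication: a score needs written evidence"
```

Failing at entry time is a deliberate choice: it stops an empty comment box from slipping into the debrief, where nobody remembers the "why" anymore.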

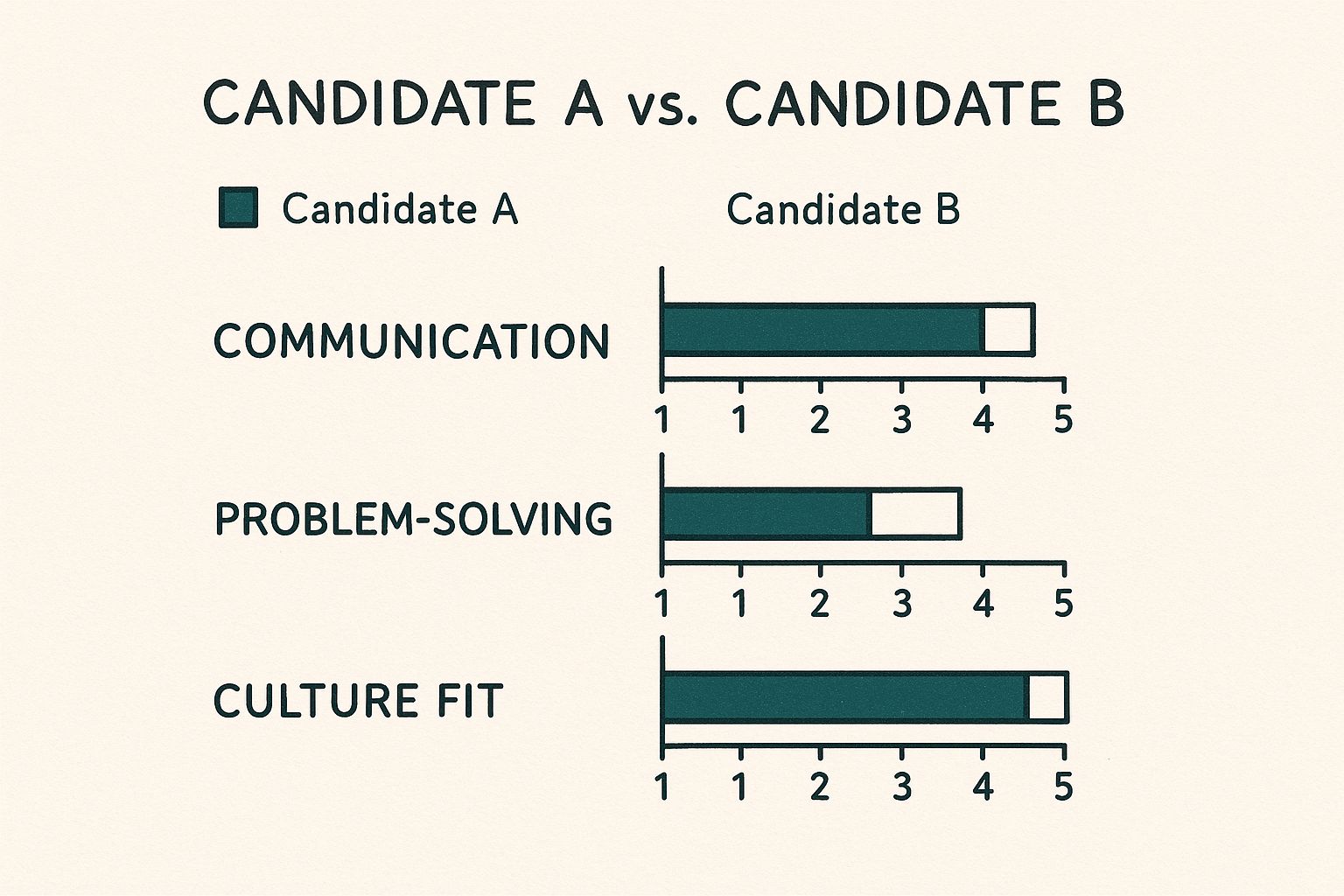

This infographic shows how two candidates might stack up visually when you’re scoring them against clear, evidence-based criteria.

Here, it's immediately obvious that while both candidates are strong communicators, Candidate A pulls ahead significantly in problem-solving. That’s a clear data point to bring into the debrief meeting.
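Once every rating is a number backed by evidence, spotting that kind of gap takes only a few lines. This Python sketch assumes each candidate's scores live in a simple competency-to-score dictionary; the scores are invented for illustration and are not read from the infographic.

```python
def compare(a: dict[str, int], b: dict[str, int]) -> list[tuple[str, int]]:
    """Per-competency gap (a minus b) for competencies both candidates
    were scored on, largest absolute gap first, ties broken by name."""
    shared = a.keys() & b.keys()
    gaps = [(name, a[name] - b[name]) for name in shared]
    return sorted(gaps, key=lambda item: (-abs(item[1]), item[0]))


# Invented scores for illustration only.
candidate_a = {"Communication": 4, "Problem-Solving": 5, "Leadership": 3}
candidate_b = {"Communication": 4, "Problem-Solving": 2, "Leadership": 3}

for name, gap in compare(candidate_a, candidate_b):
    print(f"{name}: {gap:+d}")
# Problem-Solving: +3
# Communication: +0
# Leadership: +0
```

Keeping the comparison per competency, rather than summing everything into one total, is what preserves the "Candidate A pulls ahead in problem-solving" signal for the debrief.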

Rating Scale Breakdown: Do This, Not That

The difference between a useless scale and a powerful one is all in the definitions. A vague scale relies on gut feelings, but an anchored scale demands proof. Here’s a side-by-side look.

| Score | Vague (Useless) Definition | Anchored (Actionable) Definition |
|---|---|---|
| 1 | Poor | Candidate could not provide a single relevant example. |
| 2 | Below Average | Candidate’s example was unclear or didn't fully address the question. |
| 3 | Average | Provided a solid, relevant example demonstrating baseline competency. |
| 4 | Good | Provided a strong example with good detail, meeting all expectations. |
| 5 | Excellent | Provided multiple, detailed examples that went beyond the role's requirements. |

See the difference? One is an opinion; the other is an observation. This simple change transforms your scorecard from a collection of feelings into a source of truth.

Formatting for Fast Decisions

Finally, think about the design. It has to be clean and scannable. Don't create a wall of text that makes your interviewers' eyes glaze over.

Use clear headings, plenty of white space, and maybe even a dedicated "Red Flags" box for any absolute deal-breakers.

Remember, this document is a tool, not a bureaucratic form. Make it easy to use, and your team will actually thank you for it. This is how you start getting consistent data that leads to confident, defensible hiring decisions.

How to Run the Interview Without Sounding Like a Robot

So, you’ve built a world-class interview evaluation sheet. That's a huge step. But if your interviewers clutch it like a script they're seeing for the first time, you’ve only managed to invent a very structured way to make great candidates feel awkward.

The sheet isn’t a teleprompter; it’s a set of guardrails. It's there to keep the conversation productive and on track, not to turn you into a hiring drone.

The real art is using that sheet to guide a natural, human conversation while you're secretly a data-gathering machine. This means your hiring team needs to know the criteria cold. They shouldn't be staring at a form, frantically trying to fill boxes. They should be making eye contact, asking thoughtful follow-up questions, and, you know, acting like a real person.

The Art of Note-Taking Without Being Creepy

We've all been there—the interview where the person across from you is just typing or scribbling furiously, never once looking up. It feels less like a conversation and more like an audit. Don't be that person.

Instead, train your team on what I call the "Listen, then Jot" method. It’s simple but effective.

- Listen actively while the candidate is talking. No typing, no writing. Just listen.

- Ask a follow-up question to show you’re engaged and digging deeper.

- Use the natural pause while they think to quickly jot down the key evidence.

This tiny shift in flow makes all the difference. The candidate feels heard, not just processed. Your goal is to capture concrete evidence—the what, when, how, and why of their examples—not a word-for-word transcript of the meeting.

Your interview evaluation sheet is a tool to fight bias, not a replacement for human connection. The moment the process feels more important than the person, you’ve lost.

To get the right data, you need a consistent interview process. This is especially true when navigating something as complex as the software engineer interview process, where structured questions are key.

The Post-Interview Debrief: A "No-Vibe" Zone

This is where your beautifully completed evaluation sheets truly earn their keep. A good post-interview debrief is a data-driven discussion, not a popularity contest. The first and most important rule is this: we don't talk about "vibes."

"Vibes" are where bias loves to hide.

Instead of hearing, "I just really liked her," the conversation needs to sound like this: "On the 'Problem-Solving' competency, she gave a specific example of refactoring a legacy system that reduced query times by 30%. Here’s the evidence I wrote down." See the difference?

Go around the room, criterion by criterion. Compare the scores, but more importantly, compare the evidence each interviewer collected. This is how you escape the all-too-common trap of hiring the most charismatic person and instead hire the most competent one.

Your evaluation sheets force a discussion based on facts, not just who told the best story or happened to go to the same college as you. It's how you make a decision, not just share an opinion.
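The criterion-by-criterion walk-through can also be prepped before anyone enters the room. The hypothetical Python sketch below groups every interviewer's score and evidence by competency and flags the items worth debating: big disagreements between interviewers, or any low score, which is a prompt for discussion rather than an automatic rejection. The names, scores, and both thresholds are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical debrief input: (interviewer, competency, score 1-5, evidence).
ratings = [
    ("Priya", "Problem-Solving", 5, "Refactored a legacy system; query times down 30%."),
    ("Marcus", "Problem-Solving", 3, "Sound approach, but needed hints on edge cases."),
    ("Priya", "Communication", 4, "Explained trade-offs clearly to a non-engineer."),
    ("Marcus", "Communication", 4, "Summarized the design in two minutes."),
]


def debrief_agenda(rows, spread_threshold=2, low_score=2):
    """Group ratings by competency and flag the ones worth discussing:
    interviewers disagree by `spread_threshold` points or more, or
    someone gave a score of `low_score` or below."""
    by_competency = defaultdict(list)
    for interviewer, competency, score, evidence in rows:
        by_competency[competency].append((interviewer, score, evidence))

    agenda = []
    for competency, entries in by_competency.items():
        scores = [score for _, score, _ in entries]
        spread = max(scores) - min(scores)
        agenda.append({
            "competency": competency,
            "mean": mean(scores),
            "spread": spread,
            "discuss": spread >= spread_threshold or min(scores) <= low_score,
            # Keep the evidence attached so the debrief argues about facts.
            "evidence": [f"{who}: {ev}" for who, _, ev in entries],
        })
    return agenda


for item in debrief_agenda(ratings):
    status = "DISCUSS" if item["discuss"] else "aligned"
    print(f'{item["competency"]}: mean {item["mean"]:.1f}, spread {item["spread"]} ({status})')
# Problem-Solving: mean 4.0, spread 2 (DISCUSS)
# Communication: mean 4.0, spread 0 (aligned)
```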

Using AI to Supercharge Your Evaluations

So, you’ve built a killer interview evaluation sheet and your debriefs are finally based on evidence instead of just "vibes." You’re feeling pretty good about your process, right?

Well, the next evolution is already here, and it’s powered by AI.

Before you roll your eyes and picture dystopian robot interviewers, let’s be clear. We're not talking about replacing your judgment. We’re talking about giving it a superpower—another layer of objective data to stack on top of your already-structured process. (Toot, toot! This is where we come in.)

Think of it this way: modern AI tools can listen in on your recorded interviews (with permission, of course) and do the heavy lifting you just don’t have time for. They can whip up transcripts in minutes, analyze them for the key competencies you’ve defined, and even detect sentiment or flag inconsistencies.

Beyond the Spreadsheet

Let's get real about what this looks like today versus what's still hype. The promise isn't to have an AI make the hiring decision for you. The real, tangible value is in speeding up your review process and, when the tool is set up and checked carefully, helping to scrub out even more unconscious human bias.

Imagine this: you've just finished a batch of video interviews. Instead of re-watching every single minute, an AI tool serves up a summary highlighting every time a candidate mentioned "project management" or "revenue growth." It flags that they used confident language when discussing their successes but became hesitant when asked about team conflicts. Suddenly, your evaluation sheet has a powerful new data source to work with.

This isn't about letting a machine call the shots. It’s about arming your team with insights they might have missed, ensuring every candidate gets the same level of scrutiny, and saving you from hours of tedious review.
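At its simplest, "highlight every mention of X" is plain phrase matching, no AI required. The Python sketch below is a deliberately naive stand-in, not how any particular product works: it finds case-insensitive matches of each phrase in a transcript and returns the surrounding text. The AI tools described here aim to go further (related wording, sentiment, context), and the transcript and phrases are invented examples.

```python
import re


def find_mentions(
    transcript: str, phrases: list[str], context: int = 40
) -> dict[str, list[str]]:
    """Naive case-insensitive phrase search: for each phrase, return the
    snippets of transcript surrounding every whole-word match."""
    hits = {}
    for phrase in phrases:
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        snippets = []
        for m in pattern.finditer(transcript):
            start = max(0, m.start() - context)
            end = min(len(transcript), m.end() + context)
            snippets.append(transcript[start:end].strip())
        hits[phrase] = snippets
    return hits


transcript = (
    "In my last role I owned project management for the billing rewrite. "
    "That project drove most of our revenue growth that year, and the "
    "project management habits stuck with the team."
)

mentions = find_mentions(transcript, ["project management", "revenue growth"])
for phrase, snippets in mentions.items():
    print(f"{phrase}: {len(snippets)} mention(s)")
# project management: 2 mention(s)
# revenue growth: 1 mention(s)
```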

This tech is gaining ground fast. As of 2025, about 45% of companies are already using AI tools to enhance their interview evaluations, automating scoring and analyzing candidate responses. But—and this is a big but—only 1% feel their deployment is fully mature. That tells you we're still in the early innings.

Where AI Shines and Where It Stumbles

AI is fantastic at spotting patterns across huge datasets. It can analyze word choice, response length, and even non-verbal cues in video interviews far more consistently than any human ever could, though consistent is not the same as accurate. For teams conducting lots of preliminary screenings, this is a game-changer.

It’s especially helpful when reviewing the dozens of submissions you might get from an on-demand video interview, as it helps you quickly pinpoint which candidates to advance.

However, AI still struggles with nuance, sarcasm, and deep contextual understanding. It can't tell you if a candidate will be a great mentor or a creative problem-solver just by analyzing their speech patterns. That’s still your job. For a deeper dive, explore how to start Mastering Analytics in HR to transform your entire hiring process.

The goal is to use AI as a ridiculously smart assistant, not as a replacement for your hard-won experience. It handles the grunt work so you can focus on what truly matters: the human element and making the final, critical judgment call. We’re not saying we’re perfect. Just more accurate more often.

Your No-Nonsense FAQ on Evaluation Sheets

Alright, let's get into the weeds. You’re sold on the idea, you’ve maybe even drafted your first interview evaluation sheet. But now the real-world headaches begin. What happens when theory meets the messy reality of your team? Here are the straight answers to the questions that always come up.

How Do We Get Skeptical Interviewers to Actually Use This?

You don't win them over with a memo. You have to sell the benefit, not the process. Frame the evaluation sheet as a tool that makes their lives easier and their decisions more defensible, not as another piece of administrative busywork.

Let's be real, nobody enjoys those painful post-interview debriefs where everyone is trying to defend a vague "gut feeling." This sheet is their secret weapon. It gives them the data to say, "I rated them a 4 on Problem-Solving, and here's the specific example they gave that proves it." Suddenly, they're the smartest person in the room.

Run a quick training session. Show them how it eliminates guesswork and makes those debriefs shorter and more productive. When they see it as a shield against bad hires and endless debates, they’ll get on board.

What If a Great Candidate Bombs One Section of the Scorecard?

First, take a breath. The scorecard isn't a magical sorting hat that screams "REJECT!" It's a data collection tool, and a low score is just a data point—an interesting one, but still just one. It’s not an automatic disqualification; it's a giant, flashing sign that says, "Talk about this!"

This is where you earn your paycheck as a hiring manager. A low score on "Technical Documentation" for a senior engineer might be a crucial discussion point. Is it a skill they can learn quickly with a bit of coaching, or is it a non-negotiable part of the job from day one? The sheet doesn't make the decision; it just frames the conversation you should be having. It helps you weigh their incredible strengths against a potential—and maybe fixable—weakness.

A scorecard that hands out perfect scores across the board is probably a poorly designed one. The goal isn't to find flawless candidates. It's to understand a candidate's specific strengths and weaknesses so you can make a calculated, intelligent decision.

Can an Interview Evaluation Sheet Introduce New Biases?

Absolutely, if it's built by someone who doesn't know what they're doing. If you fill your shiny new sheet with subjective nonsense like "Good energy" or the dreaded "Cultural fit" without defining what those mean in behavioral terms, you've just created a prettier way to be biased. You’ve just formalized your bad habits.

The whole point of a good interview evaluation sheet is to do the opposite. It forces you to anchor every single criterion to an objective, observable skill or behavior that is directly tied to job performance. Done right, it minimizes bias rather than creating it. The structure is your defense against the lazy, gut-feel judgments that lead to homogeneous, uninspired teams.

Ready to stop gambling on gut feelings and start making data-driven hires? Async Interview gives you the tools to run structured, asynchronous video interviews complete with evaluation scorecards and AI-powered transcriptions. Hire smarter, not harder.